Q: What kinds of sonic and visual forms can be algorithmically generated from text input? What are the aesthetics of these forms?

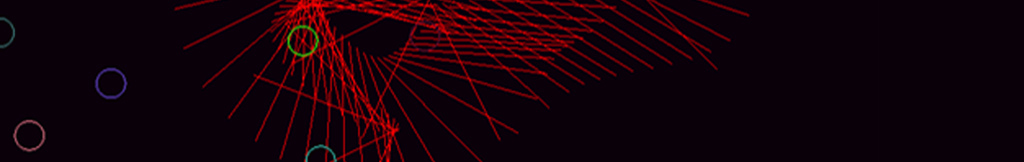

Both the sound and the visual algorithmic prototypes were developed in openFrameworks and take computer keyboard input. In the sound prototype, text entered by a user becomes the input to a synthesizer. Each character is assigned a specific frequency. As characters are entered, their ASCII values are accumulated, and the input is split into words at designated delimiter characters such as ‘space’, ‘comma’ and ‘return’. When a word appears on the screen, the frequencies of all its characters are summed to generate a new tone. As a result, each user generates a set of tones, like a melody made of words, which suggests a new relationship between words and sound.

The visual prototype creates text art from individual letters. As in the sound application, the user’s text input is parsed and displayed on the screen one word at a time. The word slowly disperses, with its component letters moving in all directions, and fades away shortly afterwards. Each time a new word is generated, it appears in a random location. When multiple users enter text simultaneously, this randomized placement detaches a sentence from its intended meaning and suggests a new sentence structure or meaning through the words that appear around it.
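The word-to-tone mapping of the sound prototype can be illustrated with a minimal sketch in plain C++, without any openFrameworks calls. It is not the prototype's actual code: the names `charFrequency`, `isDelimiter`, `WordTone` and `parseTones` are hypothetical, and the sketch assumes that a character's frequency in Hz is simply its ASCII code, since the exact character-to-frequency assignment is not specified here.

```cpp
#include <cassert>
#include <string>
#include <vector>

// ASSUMPTION: a character's frequency in Hz equals its ASCII code.
// The prototype's real character-to-frequency table is not given.
double charFrequency(char c) {
    return static_cast<double>(static_cast<unsigned char>(c));
}

// Characters that close a word: space, comma and return.
bool isDelimiter(char c) {
    return c == ' ' || c == ',' || c == '\n' || c == '\r';
}

// One completed word and the tone derived from it.
struct WordTone {
    std::string word;
    double frequency;  // sum of the per-character frequencies, in Hz
};

// Walks the typed characters in order and emits one tone per word.
std::vector<WordTone> parseTones(const std::string& input) {
    std::vector<WordTone> tones;
    WordTone current{"", 0.0};
    for (char c : input) {
        if (isDelimiter(c)) {
            // A delimiter completes the word typed so far, if any.
            if (!current.word.empty()) {
                tones.push_back(current);
                current = WordTone{"", 0.0};
            }
        } else {
            current.word += c;
            current.frequency += charFrequency(c);
        }
    }
    // ASSUMPTION: a trailing word without a delimiter is also emitted.
    if (!current.word.empty()) {
        tones.push_back(current);
    }
    return tones;
}
```

Under this assumed mapping, `parseTones("hi, there\n")` yields two tones: "hi" at 209 Hz (104 + 105) and "there" at 536 Hz. In an openFrameworks application, each resulting frequency would then be passed to whatever oscillator the sketch uses for audio output.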